At its core, data science is the art of telling stories with data. It’s a field that blends scientific methods, powerful algorithms, and a bit of detective work to pull meaningful insights out of the noise of raw information.

Think of it as a mashup of a statistician, a software engineer, and a business strategist all rolled into one. The goal isn’t just to look at data; it’s to understand it, question it, and use it to predict what might happen next.

Your Introduction to the Field

So, what does a data scientist actually do? Imagine you’re a modern-day detective sifting through mountains of digital clues—emails, website clicks, social media posts, and sales figures. Your job isn’t to solve a crime, but to spot hidden trends and forecast future behavior. That’s the essence of data science.

It’s all about asking smart questions and then digging into the data to find the answers. This field moves way beyond just reporting on what happened in the past. It’s about figuring out why it happened, predicting what’s likely to come next, and even suggesting the best course of action to take. This forward-looking approach is what makes it so valuable.

The Core Components of Data Science

Data science isn’t just one single skill; it’s a powerful mix of several distinct disciplines. To help break it down, let’s look at the key pillars that hold everything up.

Key Pillars of Data Science at a Glance

| Component | Simple Description | Primary Goal |
|---|---|---|
| Statistics & Mathematics | The foundational principles for making sense of numbers. | To test ideas, find relationships, and build reliable models. |
| Computer Science & Programming | The technical toolkit for handling data at scale. | To collect, clean, store, and process massive datasets efficiently. |
| Domain Expertise | In-depth knowledge of a specific industry (e.g., finance, healthcare). | To ask the right questions and translate data findings into real-world value. |

Each pillar is crucial. Without the right context from domain expertise, a technically perfect model might solve a completely irrelevant problem. And without strong programming skills, a brilliant statistical insight might never work on the massive datasets businesses have today.

A data scientist is often described as someone who is better at statistics than any software engineer and better at software engineering than any statistician.

This unique combination is the secret sauce. It allows them to build solutions that are not only technically sound but also statistically solid and relevant to the business.

From Raw Numbers to Smart Decisions

Ultimately, the entire point of data science is to help people and organizations make better, more informed decisions. It’s not about generating complex charts for the sake of it; it’s about delivering clear, actionable insights that drive real-world outcomes.

For a business, this could mean anything from identifying at-risk customers to optimizing a supply chain or personalizing a user’s app experience. It’s about replacing guesswork with data-driven confidence.

Tracing the Roots of Modern Data Science

To really get a handle on data science, it helps to look back at how we got here. The field didn’t just pop into existence. It grew over decades, born from a simple but powerful question: what could we learn if we combined the analytical rigor of statistics with the raw processing power of computers?

This idea—marrying human analysis with machine computation—planted the seeds for everything that followed. The early visionaries weren’t just thinking about faster spreadsheets; they imagined an entirely new way of making sense of the world through data.

The Statistical Foundation

Long before anyone used the term “data science,” statisticians were laying the groundwork. They developed powerful methods for drawing solid conclusions from limited information, a skill that’s still central to the discipline today. The main limitation, of course, was that complex calculations had to be done by hand.

The real shift came as computing technology started to catch up. As early as 1962, John Tukey was already arguing, in his paper ‘The Future of Data Analysis’, for a new scientific field that would merge statistical methods with computers. Fast forward to 2001, and William S. Cleveland’s paper, ‘Data Science: An Action Plan,’ was a direct call to start training a new generation of analysts for this emerging digital world.

This evolution was about more than just doing things faster. It was about doing things bigger. Computers could chew through datasets far larger and more complex than any person could ever hope to, unlocking completely new avenues for discovery.

The goal was to create a discipline that was more practical and outward-looking than traditional statistics, yet more grounded in scientific principles than pure computer science.

At its core, this new field would be all about solving real problems by letting the data lead the way.

The Big Data Explosion

The early 2000s brought another massive change: the arrival of “big data.” The internet unleashed a firehose of information, generating more data in a day than we used to collect in decades. All of a sudden, companies were swimming in user clicks, social media posts, and sensor readings.

This created a huge problem. The old databases and analytical tools just buckled under the sheer volume, variety, and velocity of all this new information. We needed a completely new toolkit to store and process data on a scale nobody had ever seen before.

That’s where technologies like Apache Hadoop, first released in 2006, changed the game. Hadoop gave us a way to spread massive datasets across clusters of regular, off-the-shelf computers, which made large-scale data processing far more affordable than it had been. This was a key step in unlocking the potential of big data.

The Convergence with AI and Machine Learning

Once we could process all that data, the final piece of the modern data science puzzle clicked into place: machine learning. Many of the algorithms weren’t new, and some had been around for decades. But they needed two things: massive amounts of data and serious computing power. The big data era delivered both.

This powerful combination created the field of data science as we know it. Data scientists could now do more than analyze what happened in the past. They could build models that learn from historical data to make useful predictions about the future.

This potent mix of big data and machine learning is the engine behind many of the tools we now take for granted:

- Recommendation Engines: The systems that suggest what you should watch on Netflix or buy on Amazon.

- Fraud Detection: The algorithms that instantly flag a suspicious transaction on your credit card.

- Medical Diagnostics: AI models that help doctors spot diseases in X-rays or MRI scans with greater accuracy.

From its roots in statistical theory to its current home at the center of artificial intelligence, data science has always been about one thing: turning raw information into useful insight. It’s a field built on a history of constant innovation, all driven by that timeless human desire to find meaning in the world around us.

Understanding the Core Components of Data Science

To really get what data science is all about, we need to pop the hood and look at the moving parts. It’s not one single skill; it’s a powerful mix of different fields working together. Think of it like a high-performance engine—each component has a specific job, but the real power comes from how they connect and fire in unison.

This blend of disciplines is what makes data science so effective. By combining different types of expertise, we can tackle complex problems from multiple angles and arrive at solutions that are both creative and dependable.

Let’s break down the four pillars that form the foundation of data science.

Statistics: The Grammar of Data

At its very heart, data science is built on the bedrock of statistics and mathematics. This is the grammar that lets us read, interpret, and make sense of the stories hidden in the numbers. Without a solid statistical footing, data is just noise.

Statistics gives us the tools to separate a meaningful signal from random chance. It helps us measure uncertainty, test our assumptions, and build models that we can actually trust. It’s the framework that ensures the insights we find aren’t just flukes but are grounded in sound scientific principles.

Here’s what statistics brings to the table:

- Descriptive Statistics: This is about summarizing data to get a quick snapshot—calculating the mean, median, mode, and so on.

- Inferential Statistics: This is where we use a smaller, manageable sample of data to make smart guesses about a much larger group.

- Probability Theory: This helps us measure the likelihood of different outcomes, which is crucial for managing risk and making forecasts.

Ultimately, statistics provides the rigor that turns raw observations into credible, defensible conclusions.
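Here's a small Python sketch that shows those three ideas side by side. The order values are made up. It uses only the standard library's `statistics` module to stay self-contained. The confidence interval uses a normal approximation, which is a simplifying assumption; for only ten data points, a t-distribution would be the more careful choice.

```python
import statistics
from statistics import NormalDist

# Hypothetical sample: ten daily order values, in dollars.
sample = [12.0, 15.5, 15.5, 18.0, 21.0, 22.5, 30.0, 15.5, 19.0, 25.0]

# Descriptive statistics: summarize the data we actually have.
mean = statistics.mean(sample)      # 19.4
median = statistics.median(sample)  # 18.5
mode = statistics.mode(sample)      # 15.5
sd = statistics.stdev(sample)       # sample standard deviation

# Inferential statistics: a rough 95% confidence interval for the mean of the
# larger population these orders came from. Uses a normal approximation; for a
# sample this small a t-distribution would give a somewhat wider interval.
z = NormalDist().inv_cdf(0.975)     # about 1.96
margin = z * sd / len(sample) ** 0.5
ci_low, ci_high = mean - margin, mean + margin

# Probability: if order values were roughly normal with this mean and spread,
# how likely is a single order above $25?
p_over_25 = 1 - NormalDist(mean, sd).cdf(25)

print(f"mean={mean:.2f} median={median} mode={mode}")
print(f"95% CI for the mean: ({ci_low:.2f}, {ci_high:.2f})")
print(f"P(order > $25) is about {p_over_25:.2f}")
```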

Machine Learning: The Predictive Engine

If statistics is the grammar, then machine learning (ML) is the engine that drives prediction and automation. ML is all about algorithms that learn patterns from past data without needing to be programmed for every single possibility. This is the part of data science that’s focused on answering, “What’s going to happen next?”

This is where things start to feel a bit like science fiction. An ML model can sift through thousands of variables to predict which customers might leave, spot a fraudulent transaction in milliseconds, or even help diagnose diseases from medical scans. And the best part? These models can often become more accurate when they are retrained on more, and better, data.

Machine learning gives a computer the ability to learn without being explicitly programmed. It’s the art of teaching computers to find patterns that humans might miss, operating at a scale and speed we simply can’t match.

For example, when an e-commerce site recommends a product, its machine learning model isn’t just looking at what you bought yesterday. It’s analyzing your entire click history, how long you hovered over an item, and what other people like you have purchased to make a highly personalized suggestion.
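The "people like you" idea fits in a few lines of Python. This is a deliberately tiny example of user-based collaborative filtering. The users, the items, and the `jaccard` and `recommend` helpers are all invented for illustration. Purchases are the only signal, and similarity is a simple overlap score rather than a trained model. Real recommenders draw on much richer data, such as click history and hover time.

```python
# Hypothetical purchase histories: user -> set of items bought.
purchases = {
    "ana":   {"tent", "stove", "lantern"},
    "ben":   {"tent", "stove", "sleeping bag"},
    "chloe": {"novel", "lamp"},
    "dev":   {"tent", "lantern", "compass"},
}

def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two purchase sets: 0 = nothing shared, 1 = identical."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def recommend(user: str, k: int = 2) -> list[str]:
    """Score items the user hasn't bought by how similar their buyers are to the user."""
    mine = purchases[user]
    scores: dict[str, float] = {}
    for other, items in purchases.items():
        if other == user:
            continue
        similarity = jaccard(mine, items)
        for item in items - mine:
            scores[item] = scores.get(item, 0.0) + similarity
    # Highest score first; ties broken alphabetically so results are stable.
    ranked = sorted(scores, key=lambda item: (-scores[item], item))
    return ranked[:k]

print(recommend("ana"))
```

For this data, Ana is shown the compass and the sleeping bag. Those items were bought by the two shoppers whose baskets overlap most with hers.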

Data Engineering: The Essential Plumbing

All the brilliant statistical analysis and powerful machine learning models in the world are completely useless without clean, accessible data. This is where data engineering comes in. It’s the critical, often invisible, plumbing that makes everything else work.

Data engineers are the architects who design, build, and maintain the systems that gather, store, and process massive amounts of information. They create “data pipelines” that grab raw data from all its different sources, scrub it clean, reshape it into a usable format, and deliver it right where the data scientists need it.

Data scientists often report spending up to 80% of their time just cleaning and preparing data, which shows how much depends on good data engineering. Without it, data stays trapped in silos: messy, unreliable, and pretty much useless.
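A pipeline like this is often described as extract, transform, load (ETL). Below is a minimal sketch of that shape using only Python's standard library. The CSV contents, the table name, and the cleaning rules are hypothetical. A production pipeline would usually run on a scheduler such as Airflow and write to a real data warehouse rather than an in-memory SQLite database.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system: stray spaces, inconsistent
# capitalization, and one record with a missing amount.
RAW_CSV = """order_id,customer,amount
1001, alice ,19.99
1002,BOB,5.00
1003,Carol,
"""

def extract(text: str) -> list[dict[str, str]]:
    """Extract: read raw rows from the source (a CSV string here)."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: list[dict[str, str]]) -> list[tuple[int, str, float]]:
    """Transform: fix types and formatting, and drop rows missing required fields."""
    clean = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # nothing to load; a real pipeline might log or quarantine the row
        clean.append((int(row["order_id"]), row["customer"].strip().title(), float(amount)))
    return clean

def load(rows: list[tuple[int, str, float]]) -> sqlite3.Connection:
    """Load: write cleaned rows where analysts can query them (in-memory SQLite here)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn

conn = load(transform(extract(RAW_CSV)))
customers = [name for (name,) in conn.execute("SELECT customer FROM orders ORDER BY order_id")]
total = conn.execute("SELECT SUM(amount) FROM orders").fetchone()[0]
print(customers, round(total, 2))
```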

Domain Expertise: The Guiding Compass

The final piece that ties everything together is domain expertise. This is just a fancy way of saying you need to actually understand the world you’re analyzing—whether that’s finance, healthcare, marketing, or manufacturing. This knowledge acts as a compass, making sure all the technical work is aimed at solving a real, meaningful problem.

A data scientist with deep domain expertise knows which questions are worth asking in the first place. They can look at a result and understand its practical implications for the business. They can spot when a statistically significant finding is actually meaningless in the real world or notice subtle clues in the data that a pure technologist would completely miss.

This is what turns a technically correct model into a valuable business asset. It’s the difference between building a model that predicts customer behavior with 95% accuracy and building one that actually helps the company grow its revenue.

These four components—statistics, machine learning, data engineering, and domain expertise—aren’t just related; they are completely intertwined. True data science happens at the intersection where all four meet. To help clarify how these roles differ, here’s a quick comparison.

Data Science Disciplines Compared

| Discipline | Primary Focus | Key Skills | Common Tools |
|---|---|---|---|
| Data Analyst | Interpreting past data to answer “what happened?” | SQL, data visualization, statistics, communication | Tableau, Power BI, Google Sheets |
| Data Scientist | Using data to predict future outcomes and build models. | Python/R, machine learning, statistics, data storytelling | Scikit-learn, TensorFlow, Jupyter |
| Data Engineer | Building and maintaining systems to collect and process data. | SQL, Python/Scala, cloud platforms, ETL, data warehousing | Apache Spark, Airflow, AWS, Snowflake |
| ML Engineer | Deploying, monitoring, and scaling machine learning models in production. | Software engineering, Python, MLOps, cloud computing | Kubernetes, Docker, Kubeflow, SageMaker |

As you can see, while each role has its specialty, they all rely on each other to bring a data project from an idea to a real-world application.

How a Data Science Project Actually Works

A data science project is way more than just plugging numbers into an algorithm. It’s a structured journey that starts with a fuzzy business question and ends with a concrete, working solution.

Think of it like building a custom car. You don’t just start welding parts together and hope for the best. You figure out what you want the car to do first, draw up a blueprint, source the right materials, assemble the engine, and then test it rigorously before it ever hits the open road.

That’s exactly how a good data science initiative works. It follows a well-defined lifecycle where each stage builds on the last. This methodical approach ensures the final product isn’t just technically cool but is genuinely useful in the real world. It keeps teams from chasing irrelevant questions or building models that don’t solve the problem they were meant to.

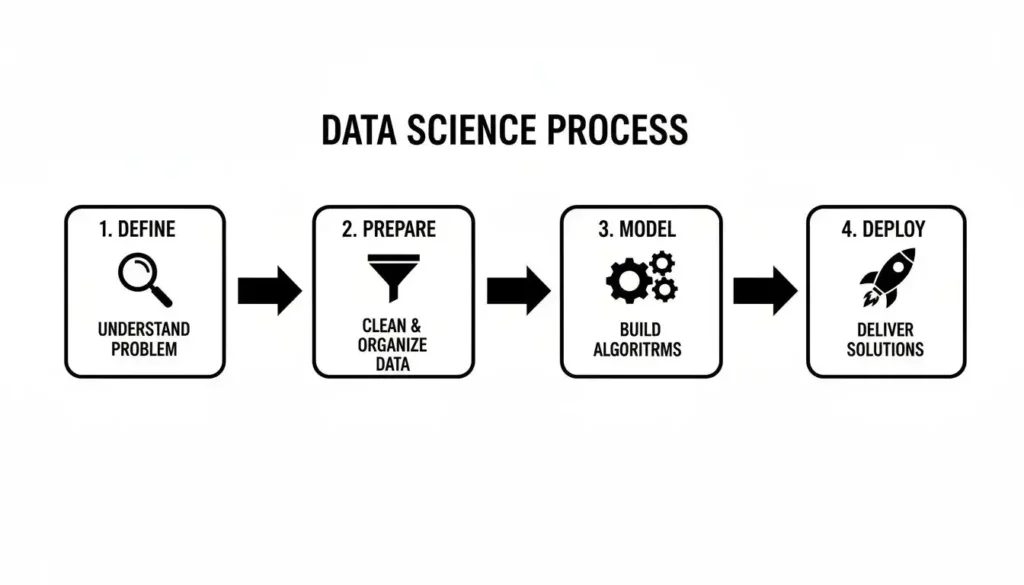

This flowchart gives you a high-level view of the typical process, from defining the goal to getting the solution out the door.

As you can see, it’s a clear progression. Data science is a discipline, not a chaotic mess of data exploration. Let’s walk through what each of these steps actually looks like on the ground.

Defining the Business Objective

Before anyone writes a single line of code, the most crucial step is to define what success even means. What problem are we really trying to solve here? A clear objective becomes the North Star for the entire project, guiding every single decision that comes after.

This isn’t about vague requests like “we need more data” or “let’s build a machine learning model.” It’s about framing a specific, measurable business goal. For example, instead of a fuzzy request to “improve customer retention,” a sharp objective would be: “Build a model that can predict which subscribers are most likely to cancel their service in the next 30 days, with at least 85% accuracy.”

Getting this right is everything. It makes sure the team is solving a problem the business actually cares about.

Data Acquisition and Cleaning

With a clear goal in hand, it’s time to gather the raw ingredients—the data. This stuff can come from all over the place: the company’s internal databases, third-party APIs, public datasets, or even by scraping websites.

But here’s the catch: raw data is almost never ready to use. It’s messy, incomplete, and full of errors. This is where data cleaning, or data wrangling, comes in. It’s a painstaking process that can involve:

- Handling missing values: Figuring out whether to ditch records with missing info or to fill in the gaps using statistical methods.

- Correcting inconsistencies: Standardizing formats, like making sure all dates follow the same structure (e.g., MM/DD/YYYY).

- Removing duplicates: Finding and deleting redundant entries that could throw off the whole analysis.
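Here's what those three steps can look like on a handful of records, written in plain Python. The records are hypothetical. Filling gaps with the median is just one common choice. The code also assumes that slash-separated dates are month-first (US style). In practice, Pandas provides built-in helpers for all three steps.

```python
from datetime import datetime
from statistics import median

# Hypothetical raw records: mixed date formats, a missing age, and a duplicate.
records = [
    {"id": 1, "signup": "2024-03-05", "age": 34},
    {"id": 2, "signup": "03/07/2024", "age": None},
    {"id": 3, "signup": "2024-03-09", "age": 28},
    {"id": 1, "signup": "2024-03-05", "age": 34},  # same customer entered twice
]

# Date formats we expect. Anything else raises, so bad dates can't slip through silently.
KNOWN_DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y")

def standardize_date(text: str) -> str:
    """Parse a date in any known format and re-emit it as MM/DD/YYYY."""
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).strftime("%m/%d/%Y")
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {text!r}")

# 1. Remove duplicates first, so repeated rows can't skew the statistics below.
seen_ids: set[int] = set()
cleaned = []
for record in records:
    if record["id"] not in seen_ids:
        seen_ids.add(record["id"])
        cleaned.append(dict(record))  # copy, leaving the raw data untouched

# 2. Fill missing ages with the median of the known ones (dropping the row is the alternative).
fill_age = median(r["age"] for r in cleaned if r["age"] is not None)

# 3. Apply the fill and standardize every date.
for record in cleaned:
    if record["age"] is None:
        record["age"] = fill_age
    record["signup"] = standardize_date(record["signup"])

print(cleaned)
```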

This stage is famously time-consuming, but it’s absolutely non-negotiable. Feeding a model dirty data is like putting contaminated fuel in a high-performance engine. The results will be unreliable at best and disastrous at worst.

There’s an old rule of thumb in this field: data scientists spend roughly 80% of their time collecting, cleaning, and preparing data. Only 20% is spent on the “fun” part of building and training models.

That stat really drives home how critical a clean, well-structured dataset is. It’s the foundation for everything.

Exploratory Data Analysis and Model Building

Once the data is clean, the real discovery can begin. This is the Exploratory Data Analysis (EDA) phase. Data scientists use visualization tools and statistical techniques to poke and prod the data, searching for patterns, hidden relationships, and strange outliers. The goal here is to build an intuition for the dataset and start forming hypotheses.

With some initial insights, the team moves into model building. Based on the project’s objective, they’ll pick the right kind of machine learning algorithm. For instance, if the goal is to predict a number (like a house price), they might use a regression model. If it’s to classify something into a category (like spam vs. not spam), they’d grab a classification model.

This is rarely a one-shot deal. It’s an iterative process where they’ll often train several different models and then compare how they perform to find the one that best solves their specific problem.

Model Evaluation and Deployment

A model that performs perfectly on the data it was trained on isn’t necessarily a good model. It might have just “memorized” the answers without actually learning the underlying patterns. That’s why the next step, model evaluation, is so important.

The team tests the model on a fresh set of data it has never seen before. This is the true test of how well it will perform in the real world. They’ll calculate key metrics like accuracy, precision, and recall to make sure the model meets the business objective defined way back in step one.
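All three metrics come from counting right and wrong predictions. Here's a minimal sketch that assumes a yes/no problem like the churn example from the business-objective step. The test labels are hypothetical. The example also shows why accuracy alone can be misleading when the outcome you care about is rare.

```python
def evaluate(actual: list[int], predicted: list[int]) -> dict[str, float]:
    """Accuracy, precision, and recall for a yes/no task, where 1 = positive (e.g. "will cancel")."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty lists of equal length")
    pairs = list(zip(actual, predicted))
    tp = sum(a == 1 and p == 1 for a, p in pairs)  # correctly flagged
    tn = sum(a == 0 and p == 0 for a, p in pairs)  # correctly left alone
    fp = sum(a == 0 and p == 1 for a, p in pairs)  # false alarms
    fn = sum(a == 1 and p == 0 for a, p in pairs)  # missed cases
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,  # of those flagged, share that were right
        "recall": tp / (tp + fn) if tp + fn else 0.0,     # of real positives, share we caught
    }

# Hypothetical held-out test set: 10 subscribers, 2 of whom actually cancelled.
actual = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
never_flags = [0] * 10                      # a "model" that always predicts "stays"
candidate = [0, 0, 0, 0, 0, 0, 1, 0, 1, 1]  # a model with one false alarm

print(evaluate(actual, never_flags))  # 80% accurate, yet catches no one
print(evaluate(actual, candidate))
```

The model that never flags anyone scores 80% accuracy but has zero recall. That is why the metrics you choose should reflect what the business objective actually needs.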

If the model passes the test, it’s ready for deployment. This means plugging it into a live business process. A fraud detection model, for example, would be connected to the company’s real-time transaction system, where it can flag suspicious activity as it happens. But the work doesn’t stop there. The model’s performance has to be constantly monitored to make sure it stays accurate and effective over time.
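One simple form of monitoring is to track accuracy over a rolling window of recent predictions and raise a flag when it drops. The `AccuracyMonitor` class below is a hypothetical sketch, not a standard API. The window size and threshold are illustrative. It also assumes you eventually learn the true outcome of each prediction. Real monitoring setups usually watch for shifts in the incoming data as well.

```python
from collections import deque

class AccuracyMonitor:
    """Rolling accuracy over a deployed model's most recent predictions.

    Hypothetical helper: assumes the true outcome for each prediction
    eventually becomes known (e.g. whether a flagged charge really was fraud).
    """

    def __init__(self, window: int = 500, threshold: float = 0.85) -> None:
        self.results: deque[bool] = deque(maxlen=window)  # oldest results fall off automatically
        self.threshold = threshold

    def record(self, predicted: int, actual: int) -> None:
        self.results.append(predicted == actual)

    def accuracy(self) -> float:
        # Undefined (NaN) until at least one outcome has been recorded.
        return sum(self.results) / len(self.results) if self.results else float("nan")

    def needs_attention(self) -> bool:
        # Wait for a full window so a handful of early mistakes doesn't raise an alarm.
        return len(self.results) == self.results.maxlen and self.accuracy() < self.threshold

# Usage with a tiny window: two right, two wrong -> 50% accuracy, below the 75% bar.
monitor = AccuracyMonitor(window=4, threshold=0.75)
for predicted, actual in [(1, 1), (0, 0), (1, 0), (0, 1)]:
    monitor.record(predicted, actual)
print(monitor.accuracy(), monitor.needs_attention())
```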

Seeing Data Science in Your Daily Life

It’s easy to think of data science as some abstract concept, something that happens in high-tech labs or the boardrooms of Silicon Valley. But the truth is, it’s working quietly in the background of your life every single day.

From the moment you scroll through your phone in the morning to the show you binge-watch at night, data science is the invisible engine making it all happen. Once you start to see it in action, the answer to “what is data science?” becomes much clearer.

Let’s look at a few examples that are probably already part of your daily routine.

Your Entertainment Is Powered by Data

Ever finish a series on Netflix and wonder how it just knows what you’ll want to watch next? That’s not a lucky guess. It’s the result of some seriously sophisticated data science.

Every time you watch a show, hit pause, or even just hover over a title card, you’re creating a data point. Netflix’s recommendation algorithm churns through that information, along with the viewing habits of millions of others, to find subtle patterns. It’s constantly learning what keeps people like you hooked.

Netflix has reported that its recommendations influence roughly 80% of the hours streamed on the platform. It’s a masterclass in using data to build a personalized experience that feels almost magical.

Data science acts as a personal curator, sifting through a massive library of content to find the hidden gems it thinks you’ll love. It turns an overwhelming number of choices into a manageable and relevant list.

The same idea is at play on other platforms you use constantly. That “Discover Weekly” playlist from Spotify or the next video queued up on YouTube are both products of data science trying to perfectly match content to your taste.

Protecting Your Finances in Real Time

Data science also acts as a silent bodyguard for your wallet. When you swipe your credit card, a powerful machine learning model analyzes the transaction in milliseconds to figure out if it’s really you.

So, how does it work? Banks train these models on billions of past transactions, teaching them to recognize your normal spending habits. The models learn to spot anomalies that scream “fraud,” such as:

- A purchase from a strange location you’ve never been to.

- A transaction that’s way bigger than your usual spending.

- A string of rapid-fire purchases in a short amount of time.

If a purchase seems off, the system flags it. It might get declined on the spot, or you might get an instant text message asking you to confirm the transaction. This real-time fraud detection saves consumers and banks billions of dollars every year.
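The three warning signs above can be approximated with simple rules. This Python sketch is a simplified stand-in for the trained models that banks actually use. The `fraud_signals` function, its thresholds (three standard deviations, more than three purchases in five minutes), and the cardholder data are all hypothetical.

```python
import statistics
from datetime import datetime, timedelta

def fraud_signals(
    amount: float,
    city: str,
    when: datetime,
    past_amounts: list[float],
    known_cities: set[str],
    recent_times: list[datetime],
    z_limit: float = 3.0,
    burst_limit: int = 3,
    burst_window: timedelta = timedelta(minutes=5),
) -> list[str]:
    """Return the reasons (if any) a transaction looks unusual for this cardholder."""
    signals = []
    # 1. Strange location: a city this card has never been used in.
    if city not in known_cities:
        signals.append("new location")
    # 2. Unusually large: more than z_limit standard deviations above the usual amount.
    mean, sd = statistics.mean(past_amounts), statistics.stdev(past_amounts)
    if sd > 0 and (amount - mean) / sd > z_limit:
        signals.append("unusually large amount")
    # 3. Rapid-fire: too many purchases (counting this one) inside a short window.
    in_window = [t for t in recent_times if when - burst_window <= t <= when]
    if len(in_window) + 1 > burst_limit:
        signals.append("rapid-fire purchases")
    return signals

# Hypothetical cardholder history and two incoming transactions.
past = [40.0, 55.0, 38.0, 60.0, 47.0, 52.0]
cities = {"Denver", "Boulder"}
now = datetime(2024, 5, 1, 12, 0)
burst = [now - timedelta(minutes=m) for m in (1, 2, 3)]

normal = fraud_signals(50.0, "Denver", now, past, cities, recent_times=[])
suspicious = fraud_signals(900.0, "Lisbon", now, past, cities, recent_times=burst)
print(normal, suspicious)
```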

Transforming Healthcare and Logistics

Beyond entertainment and finance, data science is having a huge impact in critical areas like healthcare and in how goods get from point A to point B. The sheer volume of data being created is staggering: global data production was projected to reach roughly 181 zettabytes in 2025. This mountain of information fuels breakthroughs, from predictive models that help inform clinical decisions to logistics optimizations that save companies a fortune.

In medicine, for example, data scientists analyze patient records, genetic data, and clinical trial results to predict disease outbreaks or create personalized treatment plans. A model might identify patients at high risk for a certain condition, allowing doctors to step in before it becomes a problem.

And think about how you get your packages. E-commerce giants like Amazon rely on data science to manage their entire supply chain. Their algorithms predict which products will be popular in your area, making sure they’re stocked in a nearby warehouse. They even calculate the most efficient delivery routes for drivers, which is why your package shows up on your doorstep so quickly.
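Finding the single most efficient route is a hard optimization problem (a version of the traveling salesman problem), so routing systems rely on heuristics and specialized solvers. The simplest heuristic is "always drive to the nearest unvisited stop." Here's a hypothetical sketch that uses a flat map and straight-line distances. It is fast, but it is not guaranteed to find the shortest route, and it does not describe how Amazon or any specific company plans deliveries.

```python
import math

Point = tuple[float, float]  # (x, y) position on a simple flat map

def nearest_neighbor_route(depot: Point, stops: list[Point]) -> list[Point]:
    """Greedy route: from wherever the driver is, go to the closest unvisited stop."""
    route = [depot]
    remaining = list(stops)
    while remaining:
        here = route[-1]
        closest = min(remaining, key=lambda stop: math.dist(here, stop))
        remaining.remove(closest)
        route.append(closest)
    route.append(depot)  # finish back at the warehouse
    return route

def route_length(route: list[Point]) -> float:
    """Total straight-line distance of a route."""
    return sum(math.dist(a, b) for a, b in zip(route, route[1:]))

# Hypothetical depot and delivery stops.
route = nearest_neighbor_route((0, 0), [(5, 0), (1, 0), (3, 0)])
print(route, route_length(route))
```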

Got Questions? We’ve Got Answers

As you’ve been reading, a few common questions might have popped into your head. It’s totally normal. Let’s tackle some of the most frequent ones to clear up any confusion and give you some practical advice.

Do I Really Need a PhD to Be a Data Scientist?

Not necessarily, and that’s a common misconception. While a PhD is often a must-have for very specific research roles (think developing brand new algorithms at a company like Google), most data science jobs in the business world prioritize hands-on, practical skills.

Honestly, a killer portfolio that shows you can tackle real-world problems is often more impressive than an advanced degree. If you can demonstrate that you know your way around Python, can clean up a messy dataset, build a working model, and explain what you found to a non-technical audience, you’re already ahead of the game. Companies want people who can solve problems and deliver value, and you don’t always need a doctorate for that.

What’s the Real Difference Between Data Science and Data Analytics?

This one trips a lot of people up. Let’s break it down with a simple analogy.

Think of a data analyst as a historian. They look at past data to figure out what happened and why. They’re masters at answering questions like, “What were our sales figures last quarter, and which regions underperformed?” It’s all about describing the past and present.

A data scientist, on the other hand, is more like a fortune teller with a toolkit. They look at the same historical data, but their goal is to build models that predict the future. They answer questions like, “Which customers are most likely to churn next month, and what can we do to stop them?” It’s a forward-looking role that involves more advanced programming and machine learning to create something new, not just report on what’s already occurred.

- Data Analytics: Explains what happened.

- Data Science: Predicts what will happen and how to influence it.

Which Programming Language Should I Learn First?

If you’re just starting out, the answer is almost always Python. It’s the undisputed champion for a reason. Its syntax is clean and relatively easy to pick up, which is a huge plus when you’re trying to learn a lot of new concepts at once.

But the real magic of Python is its incredible ecosystem of libraries built specifically for data work. You’ll quickly meet (and love) tools like:

- Pandas: Your new best friend for cleaning, slicing, and dicing data.

- Scikit-learn: A powerhouse library for building just about any machine learning model you can think of.

- Matplotlib & Seaborn: For creating the charts and graphs you need to actually see the patterns in your data.

While R is another fantastic language, especially for heavy-duty statistics, Python’s versatility and vast community support make it the perfect place for most aspiring data scientists to start their journey.

At maxijournal.com, we publish daily writing on science, technology, and more to keep you informed. Explore our latest articles to stay ahead of the curve.